PUMA: RUN MY WAY 管我怎么跑

As part of Puma's Hybrid Astro campaign, we created an interactive pop-up pavilion in one of Shanghai's busiest districts, inviting people to experience the shoes through exploration rather than competition.

Visitors customized their own Puma avatar, then stepped onto a treadmill where their movements powered a journey through three imaginative virtual worlds, celebrating the idea of running your own way.

- Gesture-based interaction design

- Physical & digital touchpoint user flows

- 2019

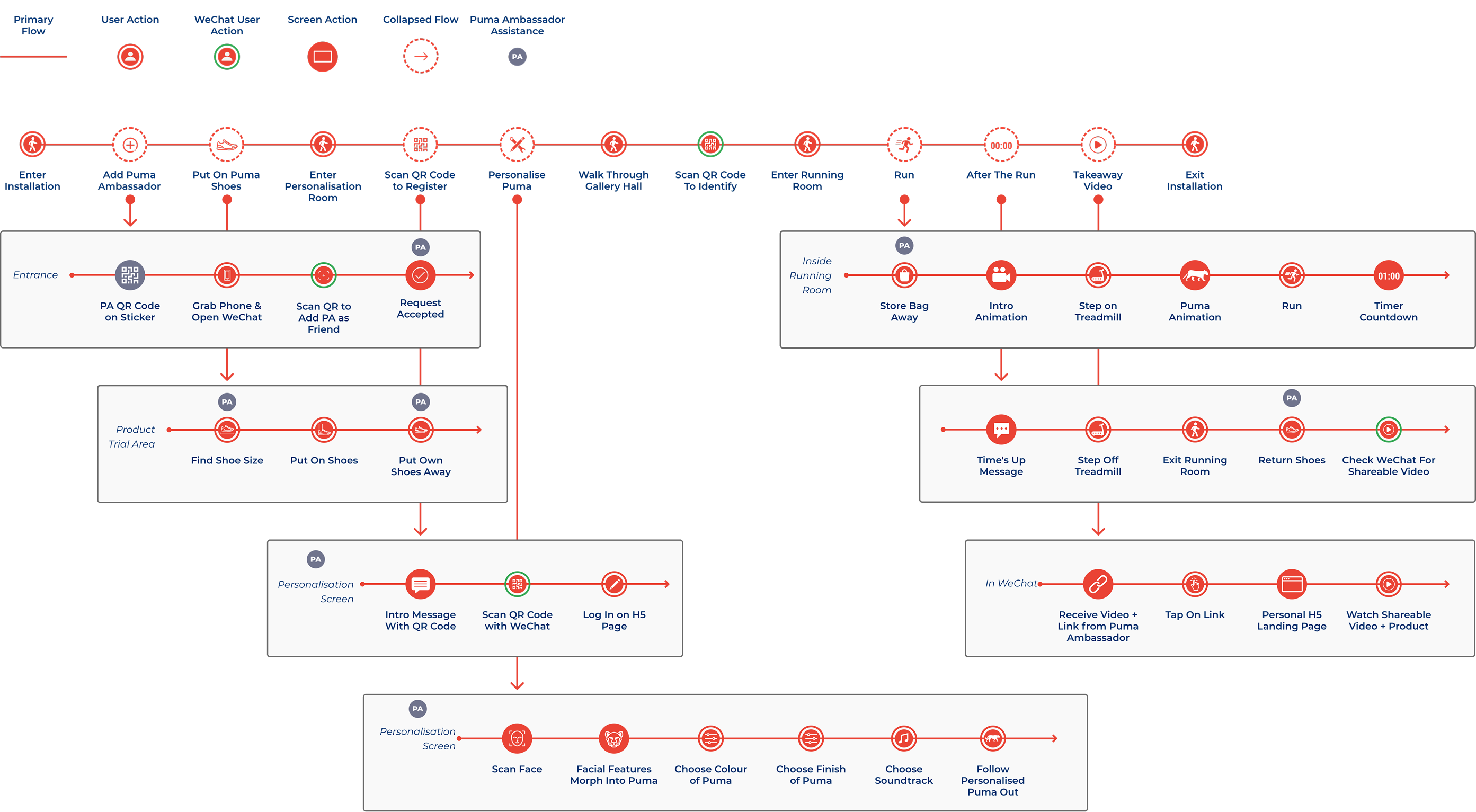

User Flow

The user flow for this project was particularly challenging: it went through multiple iterations as the pavilion's floor plan and technology choices changed.

The diagram below shows the final flow from entry to exit. Each collapsed section details the specific user interactions within the experience.

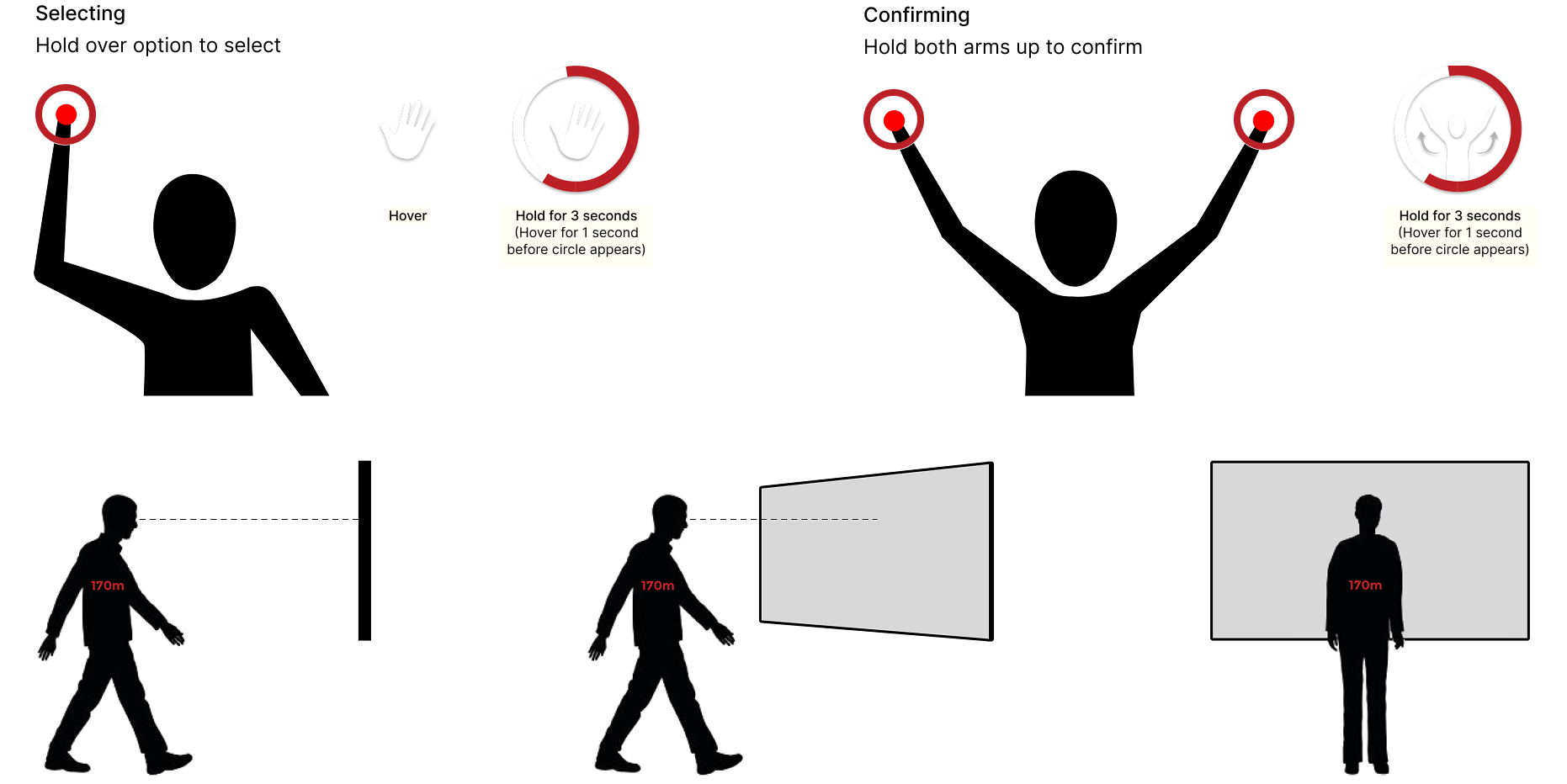

Gesture-Based Controls

As part of the experience, users started by creating a personalized Puma avatar.

I explored motion gestures to make the experience feel more intuitive and, based on research, chose the ones that felt most natural. I also paid close attention to the layout, making sure the size, placement, and distance of each component felt comfortable and easy to reach from a standing position.

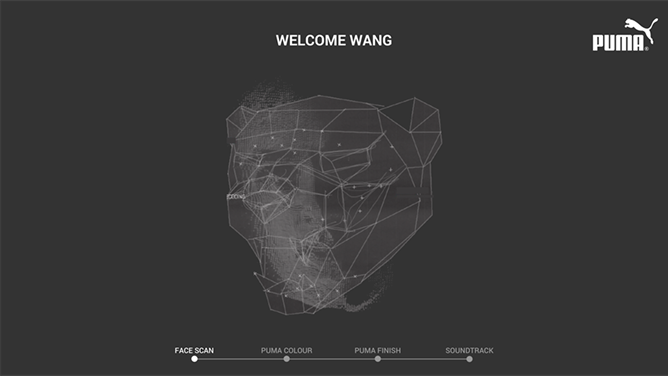

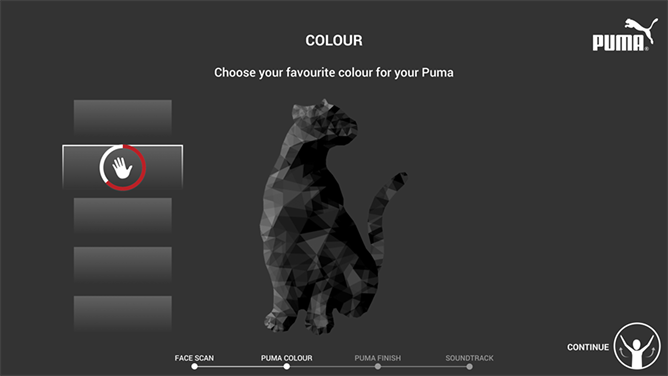

Wireframes

Final Personalization Screens

Reflection

This was my first time designing for gesture-based interactions, and it pushed me to think beyond the screen and consider the physical, 3D space the experience would live in. It was exciting to imagine how visitors would move within the room, how far they'd stand from the sensor, how large the interaction zone needed to be, and how arm movements or hover gestures could be used for selection.

Not having access to the hardware early on was a challenge, but the research phase was genuinely fun and eye-opening. Learning about gesture ranges, detection distances, and ergonomics was a great deep dive into spatial UI interaction patterns.